20 Jan

Introduction

Batch workloads are common in large-scale applications because some activities involve huge volumes of data and long processing times. Balancing processing time against cost is always a challenge, especially when the load is not constant across the day and you need a lot of resources for a short period. AWS has a wide range of services that are well suited to batch workloads, and AWS Batch makes provisioning compute resources flexible and simple, which speeds up processing. In this blog post, we will explore this service and see how to use it in real production environments. With batch processing, you run jobs asynchronously and automatically across one or more computers. In many scenarios, jobs may have dependencies on one another, which makes sequencing and scheduling multiple jobs complex and challenging.

What’s AWS Batch?

AWS Batch is a fully managed AWS service. It manages the queuing and scheduling of your batch jobs, as well as the resources required to run them. One of AWS Batch’s great strengths is its ability to manage instance provisioning as your workload requirements and budget change. AWS Batch takes advantage of AWS’s broad range of compute instance types. For example, you can launch compute-optimized and memory-optimized instances to handle different workload types, without having to build a cluster sized for peak demand.

AWS Batch Features:

- Dynamic compute resource allocation and scaling: You can configure your AWS Batch Managed Compute Environments with requirements such as type of EC2 instances, VPC subnet configurations, the min/max/desired vCPUs across all instances, and the amount you are willing to pay for Spot Instances as a % of the On-Demand Instance price.

- Support for popular workflow engines: such as Pegasus WMS and Luigi.

- Priority-based execution: AWS Batch enables you to set up multiple queues with different priority levels.

- Integrated monitoring and logging: You can view metrics related to computing capacity, as well as running, pending, and completed jobs. Logs for your jobs are available in the AWS Management Console and are also written to Amazon CloudWatch Logs.

- Powerful ACL Permissions: AWS Batch uses IAM to control and monitor the AWS resources that your jobs can access, such as Amazon S3 Buckets. Through IAM, you can also define policies for different users in your organization.

- Cost Efficiency: With the Spot instance Batch Integration, you can scale and register your ECS container instances in the most cost-efficient way by leveraging Spot instances, or you can use a blend of Spot and On-Demand, as well as utilize existing Reservations on the fly.
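To make the compute and priority features above more concrete, here is a minimal sketch of the request payloads for a Spot-based managed compute environment and two job queues with different priorities. The field names follow the boto3 `create_compute_environment` and `create_job_queue` calls; every name, ARN, subnet ID, and number below is a hypothetical placeholder, and nothing is sent to AWS.

```python
def build_compute_environment(name, subnets, security_groups, instance_role,
                              service_role, spot_fleet_role,
                              max_vcpus=64, bid_percentage=60):
    """Build keyword arguments for boto3's batch.create_compute_environment."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",
        "state": "ENABLED",
        "serviceRole": service_role,
        "computeResources": {
            "type": "SPOT",
            "minvCpus": 0,            # scale down to zero when idle
            "maxvCpus": max_vcpus,
            "desiredvCpus": 0,
            "instanceTypes": ["optimal"],
            "subnets": list(subnets),
            "securityGroupIds": list(security_groups),
            "instanceRole": instance_role,
            # Maximum Spot price as a percentage of the On-Demand price.
            "bidPercentage": bid_percentage,
            "spotIamFleetRole": spot_fleet_role,
        },
    }


def build_job_queue(name, priority, compute_environment):
    """Build keyword arguments for boto3's batch.create_job_queue."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,  # queues with a higher value are evaluated first
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment},
        ],
    }


# Hypothetical placeholder values, for illustration only.
environment = build_compute_environment(
    name="example-spot-env",
    subnets=["subnet-00000000"],
    security_groups=["sg-00000000"],
    instance_role="arn:aws:iam::123456789012:instance-profile/exampleInstanceRole",
    service_role="arn:aws:iam::123456789012:role/exampleBatchServiceRole",
    spot_fleet_role="arn:aws:iam::123456789012:role/exampleSpotFleetRole",
)
queues = [
    build_job_queue("example-high-priority", 10, environment["computeEnvironmentName"]),
    build_job_queue("example-low-priority", 1, environment["computeEnvironmentName"]),
]
```

With boto3 installed and credentials configured, each dictionary could be passed as `boto3.client("batch").create_compute_environment(**environment)` and `create_job_queue(**queue)`.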

AWS Batch Use Cases:

Financial Services: workloads that require high-performance computing, for example post-trade analytics and fraud surveillance.

Medical & Life Sciences: Drug Screening and DNA Sequencing.

Media: Rendering, Transcoding, and Media Supply Chain.

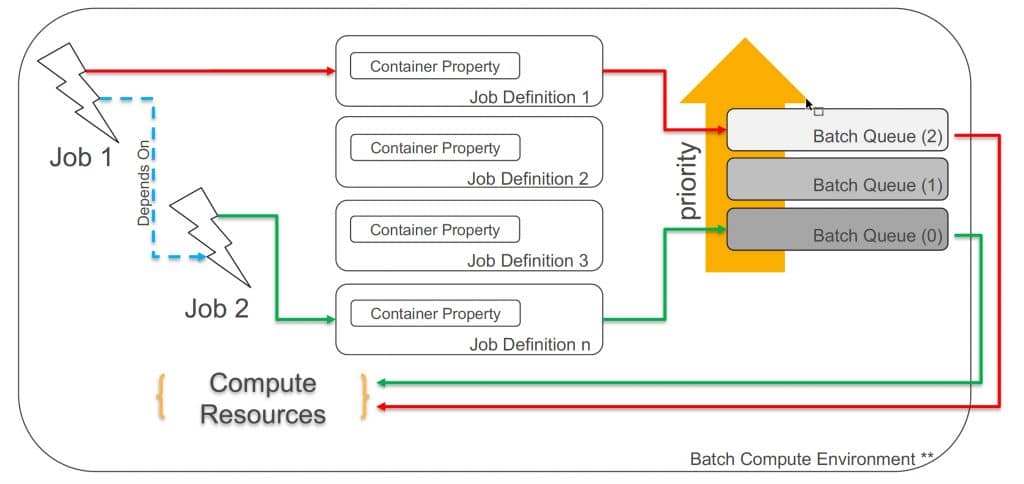

AWS Batch Components

• Compute environments: Job queues are mapped to one or more compute environments, which can be either managed or unmanaged.

• Job queues: Jobs are submitted to a job queue, where they reside until they are able to be scheduled to a compute resource. Information related to completed jobs persists in the queue for 24 hours.

• Job definitions: AWS Batch job definitions specify how jobs are to be run. Some of the attributes specified in a job definition:

- IAM role associated with the job

- vCPU and memory requirements

- Mount points

- Container properties

- Environment variables

- Retry strategy

• Jobs: Jobs are the unit of work executed by AWS Batch as containerized applications running on Amazon EC2.

• Job Scheduler: The scheduler evaluates when, where, and how to run jobs that have been submitted to a job queue.
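As a rough illustration of the job definition attributes listed above, the sketch below assembles a payload in the shape accepted by boto3's `register_job_definition`. The image name, role ARN, command, environment variable, and volume paths are hypothetical placeholders, not values from a real deployment.

```python
def build_job_definition(name, image, job_role_arn,
                         vcpus=1, memory_mib=2048, attempts=3):
    """Build keyword arguments for boto3's batch.register_job_definition."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,
            "command": ["python", "process.py"],  # hypothetical entry point
            # IAM role associated with the job
            "jobRoleArn": job_role_arn,
            # vCPU and memory requirements (values are passed as strings)
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
            # Environment variables
            "environment": [{"name": "STAGE", "value": "dev"}],
            # Mount points refer to volumes by name
            "volumes": [{"name": "scratch", "host": {"sourcePath": "/tmp"}}],
            "mountPoints": [
                {"sourceVolume": "scratch",
                 "containerPath": "/scratch",
                 "readOnly": False},
            ],
        },
        # Retry strategy: total number of attempts before the job is FAILED
        "retryStrategy": {"attempts": attempts},
    }


# Hypothetical placeholder values, for illustration only.
job_definition = build_job_definition(
    name="example-job-definition",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/example:latest",
    job_role_arn="arn:aws:iam::123456789012:role/exampleJobRole",
    vcpus=2,
    memory_mib=4096,
)
```

With boto3 available, the result could be registered through `boto3.client("batch").register_job_definition(**job_definition)`.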

Job States

Jobs submitted to a queue can have the following states:

- SUBMITTED: Accepted into the queue, but not yet evaluated for execution

- PENDING: Your job has dependencies on other jobs which have not yet completed

- RUNNABLE: Your job has been evaluated by the scheduler and is ready to run

- STARTING: Your job is in the process of being scheduled to a compute resource

- RUNNING: Your job is currently running

- SUCCEEDED: Your job has finished with exit code 0

- FAILED: Your job finished with a non-zero exit code, or was canceled or terminated.
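The lifecycle above can be modeled as a small state machine. The transition table below is a simplified reading of the state descriptions in this post, not the scheduler's actual implementation; in particular, it assumes that a job can move to FAILED from any non-terminal state because it can be canceled or terminated at any point.

```python
from enum import Enum


class JobState(Enum):
    SUBMITTED = "SUBMITTED"
    PENDING = "PENDING"
    RUNNABLE = "RUNNABLE"
    STARTING = "STARTING"
    RUNNING = "RUNNING"
    SUCCEEDED = "SUCCEEDED"
    FAILED = "FAILED"


# Assumed transitions, derived from the descriptions above.
TRANSITIONS = {
    JobState.SUBMITTED: {JobState.PENDING, JobState.RUNNABLE, JobState.FAILED},
    JobState.PENDING: {JobState.RUNNABLE, JobState.FAILED},
    JobState.RUNNABLE: {JobState.STARTING, JobState.FAILED},
    JobState.STARTING: {JobState.RUNNING, JobState.FAILED},
    JobState.RUNNING: {JobState.SUCCEEDED, JobState.FAILED},
    JobState.SUCCEEDED: set(),
    JobState.FAILED: set(),
}


def can_transition(current, target):
    """Return True if a job may move directly from current to target."""
    return target in TRANSITIONS[current]


def is_terminal(state):
    """Terminal states have no outgoing transitions."""
    return not TRANSITIONS[state]


def is_valid_history(history):
    """Check that every consecutive pair of states is an allowed transition."""
    return all(can_transition(a, b) for a, b in zip(history, history[1:]))


# A job with dependencies that eventually succeeds.
happy_path = [
    JobState.SUBMITTED, JobState.PENDING, JobState.RUNNABLE,
    JobState.STARTING, JobState.RUNNING, JobState.SUCCEEDED,
]
```

A monitoring script could use `is_terminal` to decide when to stop polling a job's status.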

AWS Batch & AWS Step Functions:

- AWS Batch has a concept of Job Definitions, which allows us to configure the Batch jobs. While each job must reference a job definition, many of the parameters that are specified in the job definition can be overridden at runtime.

- AWS Step Functions provides a reliable way to coordinate components and step through the functions of your application. Step Functions offers a graphical console to visualize the components of your application as a series of steps. It automatically triggers and tracks each step, and retries when there are errors, so your application executes in order and as expected, every time.

- AWS Step Functions integrates directly with AWS Batch and supports both asynchronous and synchronous Batch job execution. This removes the need to build a custom solution to track the state of the jobs and their completion. We can simply wait for the final parent Batch job, which consists of n child jobs, to complete.

- AWS Batch is very helpful when creating microservices and serverless solutions.

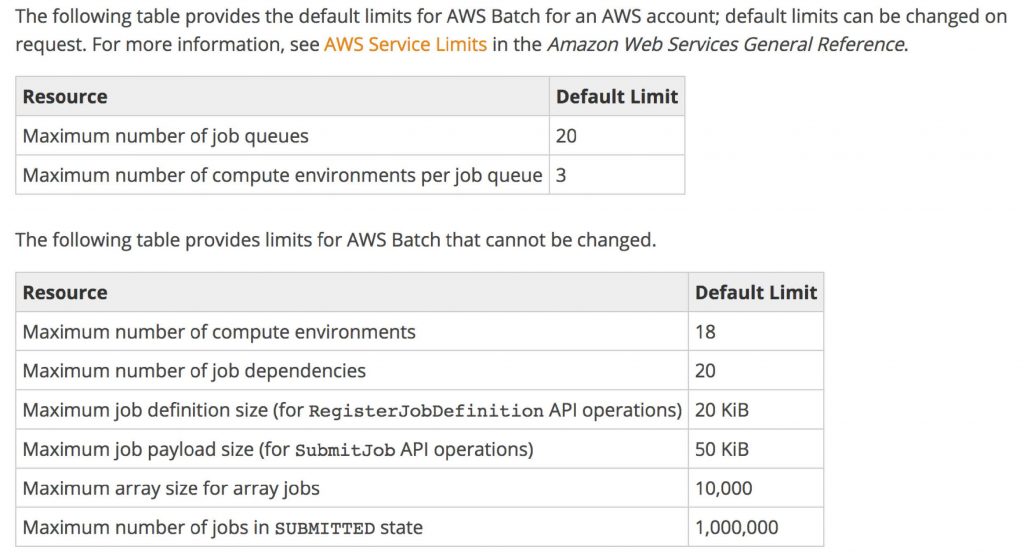
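As a sketch of the integration described above, the snippet below builds a one-step state machine definition in Amazon States Language that submits a Batch job and waits for it to finish, using the synchronous `batch:submitJob.sync` resource. The job name, queue ARN, job definition ARN, override value, and retry settings are hypothetical placeholders chosen for illustration.

```python
import json


def build_state_machine(job_name, job_queue_arn, job_definition_arn):
    """Return a Step Functions definition that runs one Batch job synchronously."""
    return {
        "Comment": "Submit an AWS Batch job and wait for it to complete",
        "StartAt": "RunBatchJob",
        "States": {
            "RunBatchJob": {
                "Type": "Task",
                # The .sync suffix makes Step Functions wait for the job to finish;
                # without it, the step returns as soon as the job is submitted.
                "Resource": "arn:aws:states:::batch:submitJob.sync",
                "Parameters": {
                    "JobName": job_name,
                    "JobQueue": job_queue_arn,
                    "JobDefinition": job_definition_arn,
                    # Override a job definition value at runtime.
                    "ContainerOverrides": {"Vcpus": 4},
                },
                # Retry the step if the Batch job fails.
                "Retry": [
                    {
                        "ErrorEquals": ["States.TaskFailed"],
                        "IntervalSeconds": 30,
                        "MaxAttempts": 2,
                        "BackoffRate": 2.0,
                    }
                ],
                "End": True,
            }
        },
    }


# Hypothetical placeholder ARNs, for illustration only.
definition = build_state_machine(
    job_name="example-job",
    job_queue_arn="arn:aws:batch:us-east-1:123456789012:job-queue/example-queue",
    job_definition_arn="arn:aws:batch:us-east-1:123456789012:job-definition/example:1",
)
definition_json = json.dumps(definition, indent=2)
```

The resulting JSON string is what you would supply as the state machine definition when creating it in Step Functions.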

Resource Limits:

To learn more about AWS Batch resource limits, check this URL. We have also included a screenshot of the limits so that you have all the information in one place.

Conclusion:

AWS Batch provisions your instances for you. This allows you to make better throughput and cost trade-offs depending on the sensitivity of your workload. Feel free to experiment: try AWS Batch on your own to get an idea of how it can help you run your specific workload. AWS PS managed services can also help you set up a well-architected design for your infrastructure.