26 Jan


Electronic Design Automation (EDA) workloads require high computing performance and a large memory footprint. These workloads are sensitive to CPU performance and clock speed, because faster cores allow more jobs to be completed on fewer cores. At AWS re:Invent 2020, we launched Amazon EC2 M5zn instances, which use second-generation Intel Xeon Scalable (Cascade Lake) processors with an all-core turbo clock frequency of up to 4.5 GHz, the fastest of any cloud instance.

Our customers have enjoyed the high single-threaded performance, high-speed networking, and balanced memory-to-vCPU ratio of EC2 M5zn instances. They have asked for instances that offer these same features along with a larger memory footprint per vCPU.

Today, we are launching Amazon EC2 X2iezn instances, which use the same second-generation Intel Xeon Scalable processors as M5zn instances, with an all-core turbo clock frequency of 4.5 GHz (the fastest of any cloud instance for EDA workloads) and up to 1.5 TiB of memory. These instances can deliver up to 55 percent better price-performance per vCPU compared to X1e instances.

X2iezn instances offer 32 GiB of memory per vCPU and support up to 48 vCPUs and 1,536 GiB of memory. Built on the AWS Nitro System, they deliver up to 100 Gbps of networking bandwidth and up to 19 Gbps of dedicated Amazon EBS bandwidth to improve performance for EDA applications.

You might have noticed the new suffixes in the instance type name: “i” indicates an Intel processor, “e” indicates extended memory within the memory-optimized instance family, “z” indicates high-frequency processors, and “n” indicates higher network bandwidth of up to 100 Gbps.
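To make the naming scheme concrete, here is a minimal Python sketch that splits an instance type name into its family, generation, attribute letters, and size. The `parse_instance_type` helper and its output format are hypothetical and for illustration only; it knows only the four attribute letters described above and is not an official AWS parser.

```python
from dataclasses import dataclass

# Attribute letters as described in this post; any other letter is
# reported as unknown, because this sketch does not cover the full scheme.
SUFFIX_MEANINGS = {
    "i": "Intel processor",
    "e": "extended memory",
    "z": "high-frequency processor",
    "n": "higher network bandwidth (up to 100 Gbps)",
}


@dataclass
class InstanceTypeName:
    family: str
    generation: int
    attributes: list[str]
    size: str


def parse_instance_type(name: str) -> InstanceTypeName:
    """Split a name such as 'x2iezn.12xlarge' into its parts (hypothetical helper)."""
    prefix, size = name.split(".", 1)
    # The family is the run of letters before the generation digit(s).
    i = 0
    while i < len(prefix) and prefix[i].isalpha():
        i += 1
    family = prefix[:i]
    # The generation is the run of digits that follows the family.
    j = i
    while j < len(prefix) and prefix[j].isdigit():
        j += 1
    generation = int(prefix[i:j])
    # Everything between the generation and the dot is an attribute letter.
    attributes = [SUFFIX_MEANINGS.get(ch, f"unknown ({ch})") for ch in prefix[j:]]
    return InstanceTypeName(family, generation, attributes, size)


print(parse_instance_type("x2iezn.12xlarge"))
```

The same helper also handles the M5zn name from earlier in the post, which carries only the “z” and “n” letters.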

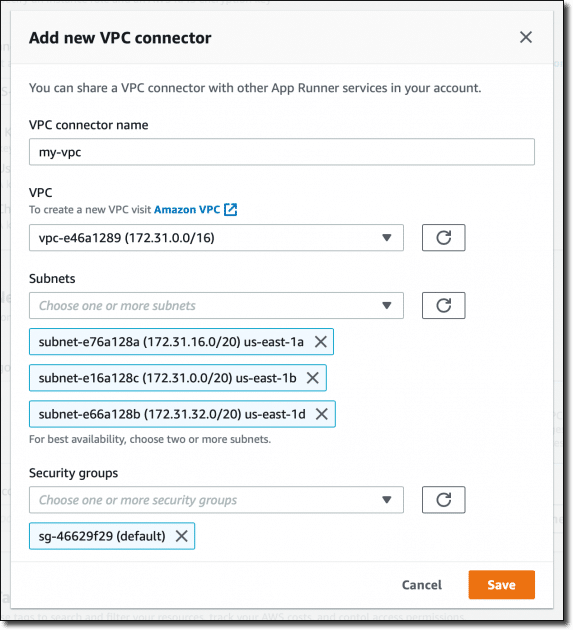

X2iezn instances are VPC-only, HVM-only, and EBS-optimized, with support for Optimize CPUs. As you can see, the memory-to-vCPU ratio on these instances is the same as that of previous-generation X1e instances:

| Instance Name | vCPUs | RAM (GiB) | Network Bandwidth (Gbps) | EBS-Optimized Bandwidth (Gbps) |
|---|---|---|---|---|
| x2iezn.2xlarge | 8 | 256 | Up to 25 | 3.17 |
| x2iezn.4xlarge | 16 | 512 | Up to 25 | 4.75 |
| x2iezn.6xlarge | 24 | 768 | 50 | 9.5 |
| x2iezn.8xlarge | 32 | 1,024 | 75 | 12 |
| x2iezn.12xlarge | 48 | 1,536 | 100 | 19 |
| x2iezn.metal | 48 | 1,536 | 100 | 19 |
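A quick way to check the 32 GiB-per-vCPU ratio is to divide the RAM column by the vCPU column. The short Python sketch below does that using only the numbers in the table above; the dictionary and function names are arbitrary choices for illustration.

```python
# Sizes copied from the table above: name -> (vCPUs, RAM in GiB)
X2IEZN_SIZES = {
    "x2iezn.2xlarge": (8, 256),
    "x2iezn.4xlarge": (16, 512),
    "x2iezn.6xlarge": (24, 768),
    "x2iezn.8xlarge": (32, 1024),
    "x2iezn.12xlarge": (48, 1536),
    "x2iezn.metal": (48, 1536),
}


def memory_per_vcpu(sizes):
    """Return GiB of memory per vCPU for each instance size."""
    return {name: ram / vcpus for name, (vcpus, ram) in sizes.items()}


ratios = memory_per_vcpu(X2IEZN_SIZES)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:g} GiB per vCPU")

# 1,536 GiB divided by 1,024 GiB per TiB gives the 1.5 TiB quoted in the post.
largest = max(ram for _, ram in X2IEZN_SIZES.values())
print(f"Largest size: {largest / 1024:g} TiB")
```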

Many customers can use X2iezn instances to improve performance and efficiency for their EDA workloads. Here are some examples:

- Annapurna Labs tested X2iezn instances with Calibre’s Design Rule Checking and measured a 40 percent faster runtime compared to X1e instances and a 25 percent faster runtime compared to R5d instances.

- Astera Labs is a fabless, cloud-based semiconductor company developing purpose-built CXL, PCIe, and Ethernet connectivity solutions for data-centric systems. They saw performance gains of up to 25 percent compared to similar EDA workloads running on R5 instances.

- Cadence tested X2iezn instances using its Pegasus True Cloud feature, which allows designers to run physical verification jobs in the cloud, and observed a 50 percent performance improvement over R5 instances. Cadence sees X2iezn instances as an excellent environment for testing EDA workloads.

- NXP Semiconductors worked with AWS to run their Calibre and Spectre workloads on Amazon EC2 X2iezn instances and measured 10-15 percent higher performance compared to their on-premises Xeon Gold 6254 processors, which have a maximum turbo frequency of 4.0 GHz.

- Siemens EDA worked with AWS to test the new Amazon EC2 X2iezn HPC/EDA-focused instances with Calibre, the industry performance and sign-off leader, on advanced-node DRC workloads. They demonstrated performance improvements of up to 14 percent using the 4.5 GHz all-core turbo frequency of X2iezn instances for all VMs in the run. They also demonstrated a heterogeneous server configuration that used an X2iezn instance as the primary node and other lower-memory VMs for remote compute, which provided an 11 percent speedup and attractive value. These results confirmed that X2iezn instances are a good fit for primary-server EDA workloads in Calibre physical and circuit verification applications.

- Synopsys IC Validator provides highly scalable, high-performance physical verification signoff. Using IC Validator’s unique elastic CPU management technology, Synopsys achieved a 15 percent performance improvement, scalability to thousands of cores, and 30 percent better efficiency compared to R5d instances.

Things to Know

Here are some fun facts about the X2iezn instances:

Optimizing CPU—You can disable Intel Hyper-Threading Technology for workloads that perform well with single-threaded CPUs, like some HPC applications.
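One way to apply this at launch time is the EC2 CPU options setting, which lets you choose the core count and the number of threads per core. The Python sketch below only builds the keyword arguments for the boto3 EC2 client’s `run_instances` call. It assumes two threads per core by default, so the core count is half the vCPU count from the table; it also leaves out x2iezn.metal on the assumption that CPU options apply only to the virtualized sizes. The helper name and the AMI ID are hypothetical placeholders.

```python
# vCPU counts from the table; x2iezn.metal is deliberately excluded because
# this sketch assumes CPU options apply only to the virtualized sizes.
VCPUS = {
    "x2iezn.2xlarge": 8,
    "x2iezn.4xlarge": 16,
    "x2iezn.6xlarge": 24,
    "x2iezn.8xlarge": 32,
    "x2iezn.12xlarge": 48,
}


def run_instances_params(instance_type: str, image_id: str,
                         disable_hyperthreading: bool = True) -> dict:
    """Build keyword arguments for boto3's EC2 client run_instances() (hypothetical helper)."""
    vcpus = VCPUS[instance_type]
    # Assumption: each physical core exposes two vCPUs (hyper-threads) by default.
    cores = vcpus // 2
    threads_per_core = 1 if disable_hyperthreading else 2
    return {
        "ImageId": image_id,  # placeholder AMI ID supplied by the caller
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        "CpuOptions": {"CoreCount": cores, "ThreadsPerCore": threads_per_core},
    }


params = run_instances_params("x2iezn.12xlarge", "ami-0123456789abcdef0")
print(params["CpuOptions"])
```

With boto3 installed and AWS credentials configured, the resulting dictionary could be passed as `boto3.client("ec2").run_instances(**params)`. Keeping the parameter construction separate from the API call makes it easy to review the CPU settings before anything is launched.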

NUMA—You can make use of non-uniform memory access (NUMA) on x2iezn.12xlarge instances. This advanced feature is worth exploring if you have a deep understanding of your application’s memory access patterns.
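As a starting point for that exploration, the Python sketch below reads the NUMA layout that the Linux kernel exposes under `/sys/devices/system/node`. It assumes a Linux guest operating system; the helper names are hypothetical, and `numactl --hardware` reports similar information if that package is installed.

```python
import glob
import os


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as '0-3,8,10-11' into CPU numbers."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_topology(sysfs_root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Map each NUMA node ID to its CPUs; returns {} if the sysfs path is absent."""
    topology = {}
    # Matches node0, node1, ... but not files such as 'online' or 'possible'.
    for path in sorted(glob.glob(os.path.join(sysfs_root, "node[0-9]*"))):
        node_id = int(os.path.basename(path)[len("node"):])
        with open(os.path.join(path, "cpulist")) as f:
            topology[node_id] = parse_cpulist(f.read())
    return topology


if __name__ == "__main__":
    for node, cpus in numa_topology().items():
        print(f"node {node}: {len(cpus)} CPUs -> {cpus}")
```

Knowing which CPUs belong to which node is the first step toward pinning memory-heavy processes so that they mostly access local memory.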

Available Now

Amazon EC2 X2iezn instances are now available in the US East (N. Virginia), US West (Oregon), Asia Pacific (Tokyo), and Europe (Ireland) Regions. You can purchase them as On-Demand Instances, Reserved Instances, Savings Plans, and Spot Instances. Dedicated Instances and Dedicated Hosts are also available.

To learn more, visit our EC2 X2i Instances page, and please send feedback to the AWS forum for EC2 or through your usual AWS Support contacts.

– Channy
